When working with PySpark, writing code may come easily, but understanding how Spark handles data behind the scenes is just as important. In particular, knowing how Spark stores data internally can help you write more efficient, better-optimized applications.

In this article, we'll dive into the different storage levels available in Spark and why they matter. Let’s get started!

By default, Spark doesn’t keep the results of transformations around. Instead, it recomputes the entire transformation chain each time an action (like collect() or count()) is triggered. This works fine for one-time use, but it becomes inefficient when you reuse the same computation multiple times in your code.

To avoid repeated computation and improve performance, Spark provides two methods: cache() and persist(). Both store intermediate results so that later actions can reuse them instead of recomputing them. cache() uses the default storage level, while persist() lets you choose where the data is kept (in memory, on disk, or both).
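The following toy model makes the cost of recomputation concrete. It is plain Python rather than Spark code, and `ToyLazyFrame` is a hypothetical class that counts how many times its transformation runs. Without caching, every action re-runs the chain. Once `cache()` is requested, the first action materializes the rows and later actions reuse them, which loosely mirrors how Spark's caching behaves.

```python
class ToyLazyFrame:
    """Toy stand-in for a lazily evaluated DataFrame (not Spark's implementation)."""

    def __init__(self, source, transform):
        self.source = list(source)
        self.transform = transform
        self.compute_count = 0         # how many times the transformation chain ran
        self._cache_requested = False
        self._cached_rows = None

    def cache(self):
        # Like Spark, marking for caching is lazy: nothing is computed here.
        self._cache_requested = True
        return self

    def _rows(self):
        if self._cached_rows is not None:
            return self._cached_rows   # served from the cache, no recomputation
        self.compute_count += 1
        rows = [self.transform(x) for x in self.source]
        if self._cache_requested:
            self._cached_rows = rows   # materialized by the first action
        return rows

    # "Actions" trigger evaluation.
    def count(self):
        return len(self._rows())

    def collect(self):
        return list(self._rows())


plain = ToyLazyFrame(range(5), lambda x: x * x)
plain.count()
plain.collect()
print(plain.compute_count)   # 2: each action re-ran the transformation

cached = ToyLazyFrame(range(5), lambda x: x * x).cache()
cached.count()
cached.collect()
print(cached.compute_count)  # 1: the second action reused the cached rows
```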

So the real question is: how should we store data in Spark, and what are the best options available?

Let’s explore the most commonly used storage levels Spark supports:

- DISK_ONLY

- DISK_ONLY_2

- MEMORY_ONLY

- MEMORY_ONLY_2

- MEMORY_AND_DISK

- MEMORY_AND_DISK_2

DISK_ONLY:

The DISK_ONLY storage level in Spark stores data exclusively on disk, without using any of the available memory. This option is particularly helpful when you're working with large datasets that exceed the capacity of your system’s memory.

Since it doesn’t occupy memory, it’s a smart choice when you want to free up memory for other computations or when working with resource-constrained environments.

To use this level, you must specify it explicitly with the persist() method:

Syntax

from pyspark import StorageLevel

fabricofdata_df.persist(StorageLevel.DISK_ONLY)

For demonstration purposes, we created a random DataFrame and persisted it using the DISK_ONLY storage level.

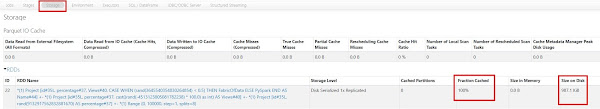

Because persist() is lazy, the data is only stored once an action runs. After running one (Job 9 in this case), we can inspect the Spark UI to see how the data was stored.

From the Spark UI (as shown in the screenshot), we can see the following:

- Fraction Cached: 100% — This means the entire dataset was successfully stored.

- Size on Disk: 987.1 KB — Indicates the physical size of the stored data.

- Size in Memory: 0.0 B — Confirms that no memory was used, as expected.

✅ Advantages

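- Memory-efficient: Keeps memory free for other tasks, which is especially useful when executor memory is limited.

- Stable under memory pressure: Since the data lives on disk, it isn't evicted when executors run low on memory. If a node fails, its cached partitions are lost and Spark recomputes them from the lineage. Use DISK_ONLY_2 if you want a replica.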

⚠️ Important Notes

- Slower Access: Reading from disk is slower than accessing data in memory.

- Storage Requirements: Make sure you have sufficient disk space, especially when working with large datasets.

Because PySpark’s predefined storage levels are all serialized (their deserialized flag is False), the Storage Level column shows "Disk Serialized".

You may want to change this default and store the objects in deserialized format, meaning they are kept in their original object form rather than converted to bytes. To do that, persist the data using the StorageLevel constructor. Keep in mind that this flag mainly matters for data held in memory: blocks written to disk are stored as bytes either way.

Syntax

StorageLevel(useDisk: bool, useMemory: bool, useOffHeap: bool, deserialized: bool, replication: int = 1)

To store the data in deserialized format, use the following code:

fabricofdata_df.persist(StorageLevel(True, False, False, True, 1))
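The five constructor flags map directly onto the label shown in the Storage tab. The code below is a plain-Python sketch: the `Level` namedtuple and `describe` function are hypothetical stand-ins, not part of PySpark. It mirrors the argument order of `StorageLevel(useDisk, useMemory, useOffHeap, deserialized, replication)` and rebuilds labels such as "Disk Serialized 1x Replicated" from the flags. The predefined values assume PySpark's own definitions, in which every built-in level has `deserialized=False`.

```python
from collections import namedtuple

# Hypothetical stand-in mirroring the argument order of pyspark.StorageLevel;
# replication defaults to 1, as in the real constructor.
Level = namedtuple(
    "Level",
    ["useDisk", "useMemory", "useOffHeap", "deserialized", "replication"],
    defaults=[1],
)

# Assumed to match PySpark's predefined levels (all serialized).
DISK_ONLY = Level(True, False, False, False)
DISK_ONLY_2 = Level(True, False, False, False, 2)
MEMORY_ONLY = Level(False, True, False, False)
MEMORY_ONLY_2 = Level(False, True, False, False, 2)
MEMORY_AND_DISK = Level(True, True, False, False)
MEMORY_AND_DISK_2 = Level(True, True, False, False, 2)


def describe(level):
    """Build a Storage-tab style label from the flags."""
    parts = []
    if level.useDisk:
        parts.append("Disk")
    if level.useMemory:
        parts.append("Memory (off heap)" if level.useOffHeap else "Memory")
    parts.append("Deserialized" if level.deserialized else "Serialized")
    parts.append(f"{level.replication}x Replicated")
    return " ".join(parts)


print(describe(DISK_ONLY))                           # Disk Serialized 1x Replicated
print(describe(Level(True, False, False, True, 1)))  # Disk Deserialized 1x Replicated
print(describe(MEMORY_ONLY_2))                       # Memory Serialized 2x Replicated
```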

DISK_ONLY_2:

The DISK_ONLY_2 storage level functions similarly to DISK_ONLY, but with an added layer of fault tolerance. In this mode, Spark replicates each partition of the data to two different nodes, meaning each piece of data is stored twice on disk.

This replication ensures that even if one node fails, Spark can still access the backup copy from another node—making it ideal for distributed systems that prioritize reliability.
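A toy placement model illustrates the reliability argument. It is plain Python: `place_replicas` and `surviving_partitions` are hypothetical helpers, and round-robin placement is an assumption, not how Spark's block manager picks peers. Each partition is written to `replication` distinct nodes. With two copies, losing any single node still leaves at least one copy of every partition.

```python
def place_replicas(num_partitions, nodes, replication):
    """Assign each partition to `replication` distinct nodes (round-robin, for illustration)."""
    if replication > len(nodes):
        raise ValueError("replication cannot exceed the number of nodes")
    placement = {}
    for p in range(num_partitions):
        placement[p] = {nodes[(p + i) % len(nodes)] for i in range(replication)}
    return placement


def surviving_partitions(placement, failed_node):
    """Partitions that still have at least one copy after `failed_node` is lost."""
    return {p for p, holders in placement.items() if holders - {failed_node}}


nodes = ["node-a", "node-b", "node-c"]
single = place_replicas(6, nodes, replication=1)  # like DISK_ONLY
double = place_replicas(6, nodes, replication=2)  # like DISK_ONLY_2

print(len(surviving_partitions(single, "node-a")))  # 4: two partitions must be recomputed
print(len(surviving_partitions(double, "node-a")))  # 6: every partition still has a copy
```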

Syntax

fabricofdata_df.persist(StorageLevel.DISK_ONLY_2)

Let’s reuse the previous example and change the storage level to DISK_ONLY_2. After running the action (e.g., .count()), we can inspect the Spark UI under the Storage tab.

From the screenshot we can see the following:

- Fraction Cached: 100% — This means the entire dataset was successfully stored.

- Size on Disk: 987.1 KB — Indicates the physical size of the stored data.

- Size in Memory: 0.0 B — Confirms that no memory was used, as expected.

- Storage Level: Disk Serialized 2x Replicated — Confirms that each partition is stored twice, on two different nodes.

✅ Advantages

- Enhanced fault tolerance: Even if one node goes down, Spark can still retrieve the data from the replicated copy stored on another node. This adds an extra layer of reliability to your pipeline.

⚠️ Important Notes

- Increased disk usage: Since the data is replicated, the disk space required is roughly double that of the DISK_ONLY storage level. Account for this when choosing this option, especially with large datasets.

- Custom replication is possible: PySpark doesn’t limit you to two copies. It also provides DISK_ONLY_3 (added in Spark 3.1). For other counts, pass the replication argument to the StorageLevel constructor, e.g. StorageLevel(True, False, False, False, 4). In practice this is rarely used, and it is limited by the number of executors and the disk space available in your cluster.

- Cluster cost trade-off: Be cautious when increasing replication levels. Each extra copy demands more executor storage and network traffic, which can significantly increase resource usage and cost.
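A rough back-of-the-envelope check helps with the cost trade-off. This sketch assumes each replica is a full copy of the cached data and ignores per-block overhead. The function names and the sizes used are hypothetical.

```python
def cluster_footprint_kb(cached_size_kb, replication):
    """Approximate cluster-wide storage, assuming each replica is a full copy."""
    return cached_size_kb * replication


def fits_budget(cached_size_kb, replication, budget_kb):
    """True if all replicas fit within the given cluster-wide storage budget."""
    return cluster_footprint_kb(cached_size_kb, replication) <= budget_kb


size_kb = 500_000      # hypothetical cached dataset (~500 MB)
budget_kb = 1_200_000  # hypothetical free disk across the cluster

for replication in (1, 2, 3, 4):
    print(replication, cluster_footprint_kb(size_kb, replication),
          fits_budget(size_kb, replication, budget_kb))
# 1 500000 True
# 2 1000000 True
# 3 1500000 False
# 4 2000000 False
```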

To store the data in deserialized format, follow the code below:

fabricofdata_df.persist(StorageLevel(True, False, False, True, 2))

MEMORY_ONLY:

The MEMORY_ONLY storage level keeps data only in executor memory. PySpark’s predefined MEMORY_ONLY is serialized, which is why the Spark UI labels it "Memory Serialized". Because the data stays in memory, Spark can access it very quickly in later computations.

However, if the dataset is larger than the available memory, only a portion of it will be cached. Any partitions that don't fit in memory are recomputed on the fly whenever an action (like .count() or .collect()) needs them.

Syntax

fabricofdata_df.persist(StorageLevel.MEMORY_ONLY)

Let’s reuse the previous example and change the storage level to MEMORY_ONLY. After running the action (e.g., .count()), we can inspect the Spark UI under the Storage tab.

From the screenshot we can see the following:

- Fraction Cached: 100% — Confirms that the full dataset was successfully stored in memory.

- Size on Disk: 0 KB — Since this level doesn’t use disk, nothing is written to disk.

- Size in Memory: 987.1 KB — Indicates the size of the data held in memory.

✅ Advantages

- High Performance: Since the data is stored in memory, reading it is much faster than reading from disk.

- No disk I/O overhead: Cached partitions are read directly from memory.

⚠️ Important Notes

- Requires sufficient memory: If your memory is limited, Spark will only cache what fits. The rest of the data will be recomputed each time it’s needed.

- No redundant copy: If a node fails, the data stored in its memory is lost. Spark will need to recompute the missing partitions from the original DAG, which can affect performance.
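The partial-caching behavior of MEMORY_ONLY, which the Storage tab reports in its Fraction Cached column, can be illustrated with a toy model. It is plain Python, not Spark's memory manager. `cache_memory_only` and `partition_computations` are hypothetical helpers, the partition sizes are made up, and a partition is simply cached if it still fits in the remaining budget.

```python
def cache_memory_only(partition_sizes_mb, memory_budget_mb):
    """Toy MEMORY_ONLY: keep a partition in memory only if it still fits; never spill to disk."""
    cached, used = set(), 0
    for pid, size in enumerate(partition_sizes_mb):
        if used + size <= memory_budget_mb:
            cached.add(pid)
            used += size
    return cached


def partition_computations(num_partitions, cached, num_actions):
    """The first action computes every partition; later actions recompute only uncached ones."""
    uncached = num_partitions - len(cached)
    return num_partitions + (num_actions - 1) * uncached


sizes = [40, 40, 40, 40, 40]  # hypothetical partition sizes in MB
cached = cache_memory_only(sizes, memory_budget_mb=100)
fraction_cached = len(cached) / len(sizes)
print(sorted(cached), f"{fraction_cached:.0%}")        # [0, 1] 40%
print(partition_computations(len(sizes), cached, 3))  # 5 + 2 * 3 = 11
```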

To store the data in deserialized format, follow the code below:

fabricofdata_df.persist(StorageLevel(False, True, False, True, 1))

MEMORY_ONLY_2:

MEMORY_ONLY_2 is similar to MEMORY_ONLY, but with an added benefit: data replication across two nodes. This means each partition of the dataset is stored twice in memory, improving fault tolerance in case one node becomes unavailable.

Syntax

fabricofdata_df.persist(StorageLevel.MEMORY_ONLY_2)

Let’s reuse the previous example and change the storage level to MEMORY_ONLY_2. After running the action (e.g., .count()), we can inspect the Spark UI under the Storage tab.

From the screenshot we can see the following:

- Fraction Cached: 100% — Confirms that the entire dataset was successfully stored in memory.

- Size on Disk: 0 KB — No disk usage, as expected with a memory-only strategy.

- Size in Memory: 987.4 KB — Indicates the amount of memory used to store the replicated dataset.

- Storage Level: Memory Serialized 2x Replicated — Confirms that each partition is duplicated in memory across different nodes.

✅ Advantages

- Improved fault tolerance: If one executor fails, the replicated data is still available from another node.

- Fast data access: Just like MEMORY_ONLY, data retrieval is extremely fast since it’s all in memory.

⚠️ Important Notes

- Higher memory consumption: Because each partition is stored twice, this approach uses roughly double the memory. Make sure your cluster has enough memory to support this.

- No fallback to disk: If memory is insufficient, partitions that don't fit are recomputed when needed instead of being stored elsewhere.

- Replication beyond 2: PySpark has no predefined MEMORY_ONLY_3, but you can request more copies through the constructor, e.g. StorageLevel(False, True, False, False, 3). Use this carefully, as it increases memory demand and can drive up cluster costs.

To store the data in deserialized format, follow the code below:

fabricofdata_df.persist(StorageLevel(False, True, False, True, 2))

MEMORY_AND_DISK:

The MEMORY_AND_DISK storage level is a hybrid strategy. It stores data in memory as long as there's enough space. Partitions that don’t fit are written to disk instead. This avoids recomputing partitions that can't be held in memory, which is a limitation of MEMORY_ONLY.

This makes MEMORY_AND_DISK especially useful when working with datasets that are too large to fully cache in memory.
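A toy MEMORY_AND_DISK model with a handful of made-up partition sizes differs from MEMORY_ONLY in a single branch: a partition that doesn't fit in memory is written to disk instead of being dropped. This is a simplification. The `cache_memory_and_disk` helper is hypothetical, and real Spark can also move blocks already in memory to disk when it needs space. It still shows why nothing has to be recomputed.

```python
def cache_memory_and_disk(partition_sizes_mb, memory_budget_mb):
    """Toy MEMORY_AND_DISK: keep what fits in memory, write the rest to disk."""
    in_memory, on_disk, used = set(), set(), 0
    for pid, size in enumerate(partition_sizes_mb):
        if used + size <= memory_budget_mb:
            in_memory.add(pid)
            used += size
        else:
            on_disk.add(pid)  # spilled instead of dropped, so it is never recomputed
    return in_memory, on_disk


sizes = [40, 40, 40, 40, 40]  # hypothetical partition sizes in MB
in_memory, on_disk = cache_memory_and_disk(sizes, memory_budget_mb=100)
fraction_cached = (len(in_memory) + len(on_disk)) / len(sizes)
print(sorted(in_memory), sorted(on_disk), f"{fraction_cached:.0%}")  # [0, 1] [2, 3, 4] 100%
```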

Syntax

fabricofdata_df.persist(StorageLevel.MEMORY_AND_DISK)

Let’s reuse the previous example and change the storage level to MEMORY_AND_DISK. After running the action (e.g., .count()), we can inspect the Spark UI under the Storage tab.

✅ Advantages

- Flexible memory usage: Stores what fits in memory and pushes the rest to disk, avoiding recomputation.

- Reduced risk of out-of-memory errors: Compared to MEMORY_ONLY, this level minimizes memory overflow issues.

- Fallback to disk: Ensures that all data is still available for computation, even if not all of it fits in memory.

Just like other options, this also supports replication and deserialization. Use the code examples below:

# For Replication

fabricofdata_df.persist(StorageLevel.MEMORY_AND_DISK_2)

# For Deserialization

fabricofdata_df.persist(StorageLevel(True, True, False, True, 1))

# For Deserialization with replication

fabricofdata_df.persist(StorageLevel(True, True, False, True, 2))
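The following rule-of-thumb helper pulls the trade-offs in this article together. `suggest_storage_level` is hypothetical, not a Spark API. Its inputs (dataset size and free executor memory, both in MB) are simplifying assumptions, and it only returns the name of a predefined level.

```python
def suggest_storage_level(dataset_mb, free_memory_mb, need_replica=False):
    """Heuristic summary of the trade-offs above; returns a StorageLevel constant name.

    Not a Spark API: the thresholds are a simplifying assumption for illustration.
    """
    copies = 2 if need_replica else 1
    needed_mb = dataset_mb * copies  # replicas multiply the footprint
    if needed_mb <= free_memory_mb:
        base = "MEMORY_ONLY"         # everything fits: fastest access
    elif free_memory_mb > 0:
        base = "MEMORY_AND_DISK"     # partly fits: spill the rest instead of recomputing
    else:
        base = "DISK_ONLY"           # no spare memory: keep it free for other work
    return base + "_2" if need_replica else base


print(suggest_storage_level(500, 1_000))                     # MEMORY_ONLY
print(suggest_storage_level(600, 1_000, need_replica=True))  # MEMORY_AND_DISK_2
print(suggest_storage_level(5_000, 0))                       # DISK_ONLY
```

In PySpark, the returned name could be turned into a level with getattr(StorageLevel, name) before calling persist().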

Now that we’ve explored and understood the various storage levels supported in Apache Spark—along with their use cases and performance trade-offs—you’ll be well-prepared to tackle common interview questions on this topic.

Here are a few frequently asked questions:

📌 Q: What are the different types of storage levels supported in Spark? Briefly explain each of them.

📌 Follow-Up Q: When would you choose MEMORY_AND_DISK over MEMORY_ONLY?